What is G-Sync? It is a technology from Nvidia that synchronizes your screen’s refresh rate with your graphics card’s frame rate. G-Sync Ultimate or G-Sync Compatible? What do these labels mean? I’m sure you’re familiar with Nvidia G-Sync technology, but opinions on its advantages vary.

Whether it’s Nvidia’s G-Sync or AMD’s FreeSync technology, these solutions aim to synchronize the refresh rate of a screen or television with the graphics card.

The goal is to reduce or eliminate stuttering, shaking, and tearing of the image. Since Nvidia’s announcement at CES 2019, G-Sync can also be enabled on FreeSync displays.

Here’s an overview of the monitors currently available on the market. You’ll also be able to compare the prices of monitors using AMD’s and Nvidia’s technologies.


What is Nvidia G-Sync?

Standard monitors operate at a fixed refresh rate. This means they always redraw the image at the same speed (frequency). For example, many screens run at 60 Hz, meaning they refresh the image 60 times per second.

Graphics cards (GPUs), however, do not work at a fixed frame rate. Some 3D scenes are more complicated to calculate than others, so the number of images rendered per second varies.

It’s important to understand that the number of frames per second your graphics card generates keeps changing. In lighter scenes, your graphics processor may render far more frames than the monitor can display; in heavier scenes, it may render fewer.

So, a graphics processor often produces images faster or slower than the monitor can display them. This mismatch between the rate at which your GPU renders images and the rate at which your monitor displays them causes visible image defects.

As shown in the image below, the misalignment of the buildings is called “screen tearing.” When your GPU produces frames (frames per second, or FPS) at a rate lower than your monitor’s refresh rate, a new frame can arrive while the monitor is partway through drawing the current one, so the top of the screen shows the old image and the bottom shows the new one.

When your GPU’s frame rate is higher than your monitor’s refresh rate, the image can be replaced several times during a single refresh, so your monitor starts displaying the next image before it has finished displaying the previous one.

In both cases, you, as the user, see what is known as Screen Tearing because you have the impression that your image is split.
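To make the two cases concrete, here is a minimal Python sketch of rendering without any synchronization. It rests on simplifying assumptions that are not from the article: frames finish at perfectly regular intervals (`k / gpu_fps` seconds), each finished frame is swapped onto the screen immediately, and the monitor scans each refresh from top to bottom over the whole refresh period, ignoring the blanking interval. The function name `tear_positions` is hypothetical.

```python
from fractions import Fraction


def tear_positions(gpu_fps, refresh_hz, n_frames):
    """Simplified model of rendering without VSync (illustrative only).

    Assumes frame k is finished at exactly k / gpu_fps seconds and is swapped
    onto the screen immediately, while the monitor scans each refresh from
    top (0.0) to bottom (1.0) over one refresh period. Returns, for each
    frame, how far down the screen the scan was at the moment of the swap.
    Any value other than 0.0 means that refresh mixes two images, i.e. a
    tear line at that height.
    """
    period = Fraction(1, refresh_hz)  # duration of one refresh, in seconds
    positions = []
    for k in range(1, n_frames + 1):
        t = Fraction(k, gpu_fps)  # time at which frame k is ready
        # Fraction of the current refresh already scanned when the swap lands.
        positions.append(float((t % period) / period))
    return positions


print(tear_positions(50, 60, 5))   # GPU slower than a 60 Hz monitor
print(tear_positions(144, 60, 5))  # GPU faster than a 60 Hz monitor
```

At 50 FPS on a 60 Hz screen, the first call prints `[0.2, 0.4, 0.6, 0.8, 0.0]`. The tear line drifts down the screen from one refresh to the next. At 144 FPS, several swaps land inside each refresh, so a single refresh can show more than one tear. Exact fractions are used so that a swap that lands precisely on a refresh boundary really reports `0.0` rather than a rounding artifact.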

VSync

VSync (vertical synchronization) eliminates image splitting by synchronizing the graphics card’s output with the monitor’s refresh rate. The graphics card holds each finished image in its buffer memory and only swaps it onto the screen when the monitor starts a new refresh.

This effectively eliminates screen tearing. However, it creates other problems, such as stuttering (jerkiness) and latency (input lag).

With VSync, the graphics card never exceeds the monitor’s refresh rate, but it still does not output images at a fixed rate.

Its frame rate can fall below the monitor’s fixed frequency, and stuttering happens when the graphics card fails to keep up with the monitor’s refresh rate.

When a frame is not ready in time for a refresh, the monitor shows the previous image again and the new frame has to wait for the following refresh. This uneven delay between images is what the user experiences as a stutter or jerk in the visuals.
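The stutter mechanism can be sketched in a few lines of Python. This is a simplified double-buffered VSync model, assumed for illustration rather than taken from any driver: the GPU starts rendering right after a swap, a finished frame waits for the next refresh, and features such as triple buffering are not modelled. The function name and the render times are hypothetical.

```python
import math
from fractions import Fraction


def vsync_refreshes_per_frame(render_ms, refresh_hz=60):
    """Simplified double-buffered VSync (illustrative assumption).

    Each frame starts rendering right after the previous swap and can only
    be swapped in at a refresh boundary. Returns, for each frame, how many
    refresh periods pass between the previous swap and this one.
    render_ms must be a list of whole milliseconds.
    """
    counts = []
    for r in render_ms:
        # Refresh periods needed to cover the render time, rounded up.
        counts.append(math.ceil(Fraction(r * refresh_hz, 1000)))
    return counts


render_times = [15, 18, 15, 20]  # hypothetical per-frame render times, in ms
counts = vsync_refreshes_per_frame(render_times)
print(counts)                                     # [1, 2, 1, 2]
print([round(c * 1000 / 60, 1) for c in counts])  # [16.7, 33.3, 16.7, 33.3]
```

Frames that take only slightly longer than one 16.7 ms refresh (18 ms or 20 ms here) are held for a full second refresh. The gap between images therefore jumps between 16.7 ms and 33.3 ms, and that irregular pacing is the stutter described above.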

G-Sync

G-Sync technology addresses screen tearing, stuttering, and input lag. Instead of restricting the graphics card’s frame rate to match the monitor’s refresh rate, NVIDIA’s G-Sync lets the monitor adjust its refresh rate to the rate at which the graphics card produces images.

So, with a G-Sync monitor, if your graphics card produces 75 frames per second (FPS), your monitor will operate at a refresh rate of 75Hz.

If a more demanding scene in a game causes your graphics card’s frame rate to drop, G-Sync lowers your screen’s refresh rate to match.

The monitor’s refresh rate therefore always follows the output of the graphics processor, which eliminates screen tearing and stuttering. It also gives a feeling of fluidity and sharpness when playing games and when working in graphic design and video editing.
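As a rough sketch of the principle, the following Python function models a variable refresh rate (VRR) monitor. It assumes a hypothetical monitor whose VRR window runs from 30 Hz to 144 Hz. Inside that window, the refresh rate simply follows the GPU. Outside it, the sketch returns `None`, because real behaviour depends on the monitor and driver and is deliberately not modelled. The function name `vrr_refresh_hz` is illustrative, not an Nvidia API.

```python
def vrr_refresh_hz(gpu_fps, min_hz, max_hz):
    """Simplified variable-refresh model (illustrative assumption).

    Inside the monitor's VRR window, the refresh rate equals the GPU's
    frame rate. Outside the window this sketch returns None, since what
    happens there depends on the monitor and driver.
    """
    if min_hz <= gpu_fps <= max_hz:
        return gpu_fps
    return None


# Hypothetical monitor with a 30-144 Hz VRR window.
print(vrr_refresh_hz(75, 30, 144))  # 75

# Hypothetical per-frame render times in ms: the refresh rate follows each one.
print([round(vrr_refresh_hz(1000 / ms, 30, 144), 1) for ms in (15, 18, 15, 20)])
# [66.7, 55.6, 66.7, 50.0]
```

Because each refresh starts as soon as a frame is ready, there is no partially drawn refresh for a new frame to interrupt, which prevents tearing. There is also no fixed refresh boundary for a late frame to miss, which prevents the stutter described in the VSync section.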

Disadvantages of Nvidia G-Sync

Although NVIDIA’s G-Sync technology works well and serves its purpose, it is not without drawbacks. Users currently face two main issues with G-Sync: cost and compatibility.

Unlike other variable refresh rate technologies (such as AMD’s FreeSync), NVIDIA’s G-Sync was initially a hardware-only solution.

This means that rather than relying on software to make the monitor use a variable refresh rate, G-Sync monitors have an Nvidia circuit installed. This module lets the monitor run at a variable refresh rate driven by the GPU.

Including G-Sync chips in displays raises their cost. Comparing two monitors with the same features, one with G-Sync and the other with FreeSync, reveals the price difference.

G-Sync monitors used to be more expensive, but Nvidia has since recognized its error. It now offers a G-Sync Compatible model to be more competitive and to make its technology available on high-quality displays.

This allows you to activate G-Sync on an Nvidia graphics card paired with a FreeSync monitor. However, there are a few limitations, which we will cover later in this article.

G-Sync Model Types

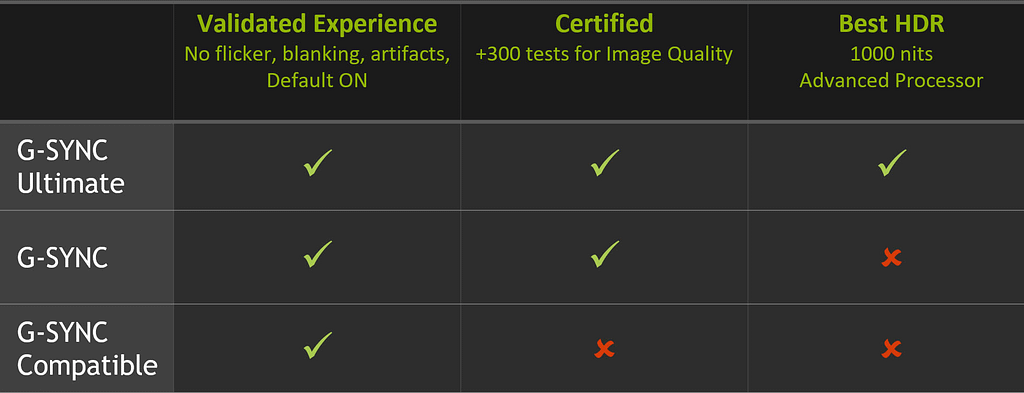

As Nvidia announced at CES 2019, a new G-Sync Compatible model has been added, which makes three versions of Nvidia’s technology:

- G-Sync Ultimate (formerly G-Sync HDR)

- G-Sync: The monitor contains the Nvidia chip.

- G-Sync Compatible: The monitor does not contain the Nvidia chip, but G-Sync is enabled by default. This label means that the monitor supports FreeSync or Adaptive-Sync and that it passed Nvidia’s tests.

We can even add a fourth version, because G-Sync can also be activated manually on a FreeSync monitor. This applies to FreeSync monitors that have not passed Nvidia’s tests or have not yet been tested.

For a range of great budget monitors, check out our guide to the best vertical monitors for coding and gaming.

What is G-Sync Compatible?

This label indicates that the FreeSync or Adaptive-Sync monitor has passed Nvidia’s tests. The graphics card then activates G-Sync automatically.

These tests check that the monitor does not show blanking, flickering, ghosting, or other artifacts when VRR (Variable Refresh Rate) is active. They also confirm that the monitor has a wide VRR range.

Specifically, the VRR range should be at least 2.4:1 (e.g. 60 Hz to 144 Hz). Finally, the monitor must offer the player a seamless experience by activating VRR by default.
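The 2.4:1 criterion is a simple ratio between the top and bottom of the VRR range. Here is a small Python check. The function name is hypothetical, and the only Nvidia criterion it encodes is the ratio quoted above; the artifact and default-on requirements cannot be checked this way.

```python
from fractions import Fraction

# Minimum max/min VRR ratio quoted in the article: 2.4:1, i.e. 12/5.
MIN_VRR_RATIO = Fraction(12, 5)


def meets_vrr_range(min_hz, max_hz):
    """Return True if a VRR window of min_hz..max_hz (whole Hz values)
    spans at least the 2.4:1 ratio."""
    return Fraction(max_hz, min_hz) >= MIN_VRR_RATIO


print(meets_vrr_range(60, 144))  # True: 144 / 60 is exactly 2.4
print(meets_vrr_range(48, 75))   # False: 75 / 48 is about 1.56
```

Exact fractions avoid any floating-point doubt for a range that sits exactly on the boundary, such as the 60 Hz to 144 Hz example.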

G-Sync with an AMD GPU?

If you already have an AMD graphics card or are planning to get one, you won’t be able to use NVIDIA’s G-Sync technology, because it only works with Nvidia graphics cards.

FreeSync is an open, royalty-free solution based on the VESA Adaptive-Sync standard. FreeSync-compatible monitors are, therefore, much cheaper than monitors with Nvidia’s chip.

What is Nvidia G-Sync Ultimate?

G-Sync Ultimate continues the G-Sync model with a move upmarket; it was formerly known as G-Sync HDR. On the AMD side, the company released FreeSync 2, which significantly improves the FreeSync model with several features, including HDR management.

This new version partly corrects the lack of rigor of the original FreeSync model. One of FreeSync’s drawbacks is that you have to check a monitor’s FreeSync frequency range carefully before choosing it.

Nvidia has recently launched a new version of G-Sync that includes HDR management.

Nvidia G-Sync, is it worth it?

It depends on the user. You need a substantial budget to benefit fully from G-Sync, and you must also have an NVIDIA GPU.

However, with the “G-Sync Compatible” model, you can cut costs and buy a FreeSync monitor, although there is still little hindsight on how well this works in practice. If you’re building a new computer, getting an NVIDIA graphics processor won’t be a problem.

If you have an AMD graphics card and are experiencing tearing or stuttering, there are two possible solutions. You could switch to an NVIDIA graphics processor, but this means additional expense. The other option is a FreeSync monitor.

G-Sync monitors are ideal for people with high-end systems and NVIDIA graphics cards. Otherwise, those with a sufficient budget can build a new high-end gaming computer with an NVIDIA graphics processor.

Unfortunately, many gamers cannot use G-Sync due to the high costs. However, it’s well worth it if you have the budget to accommodate a monitor with Nvidia’s chip and the hardware to run it.

Compatible Monitors and Prices

You won’t find a cheap G-Sync monitor. Unfortunately, that’s the price you pay for this technology. But as we said before, you can choose a FreeSync monitor listed as compatible to keep the price down.

However, if you already have an Nvidia GPU or are a fan of Nvidia, this technology will bring you a lot in terms of visual quality and fluidity. To give you an idea, here is a list of several G-Sync monitors with their prices.

If you want to change GPUs, the guide to choosing the right graphics card between Nvidia and AMD can be helpful to you.